Module 11: Logical Logistic Regression

Hi everyone!

This week we looked into logistic regression. Like the other forms of regression analysis we have covered, it estimates a dependent variable from the effect of one or more independent variables. What makes logistic regression unique is that the dependent variable is binary, so the outcome has two options such as pass or fail, yes or no, etc.

The formula is: y = (e^(b0 + b1x)) / (1 + e^(b0 + b1x))

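where y is the predicted probability of the outcome, a value between 0 and 1.

The best b values are the ones that bring y close to 1 for observations where the outcome occurred and close to 0 for observations where it did not.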

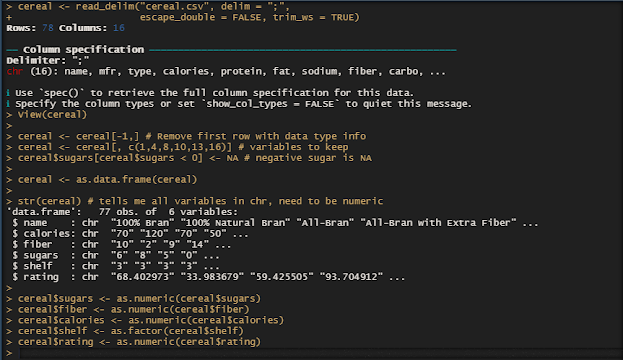

In R, we fit a logistic regression using the function glm() with family = binomial.
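To make the formula concrete, here is a small sketch that fits a model with glm() and then rebuilds the fitted probabilities by hand from b0 and b1. The choice of the built-in mtcars data (am as the binary outcome, wt as the predictor) is my own assumption for illustration; it is not part of the module exercises.

```r
# Illustrative only: mtcars is a stand-in dataset, not the course data.
# am is binary (0 = automatic, 1 = manual); wt is the car's weight.
fit <- glm(am ~ wt, data = mtcars, family = binomial)

# Pull out the estimated intercept (b0) and slope (b1)
b0 <- coef(fit)[["(Intercept)"]]
b1 <- coef(fit)[["wt"]]

# Apply y = e^(b0 + b1x) / (1 + e^(b0 + b1x)) by hand
eta <- b0 + b1 * mtcars$wt
p_manual <- exp(eta) / (1 + exp(eta))

# Probabilities reported by R for the same observations
p_glm <- predict(fit, type = "response")

# The two sets of probabilities should agree
all.equal(unname(p_glm), p_manual)
```

Every value in p_manual falls strictly between 0 and 1, which is what the formula guarantees.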

10.1

1. Set up an additive model for the ashina data, which is part of the ISwR package.

2. These data contain additive effects of subject, period, and treatment. Compare the results with those obtained from t tests.

The treatment effect is significant in the ANOVA results, with a p-value of 0.01228. The t test comparing treatment with no treatment also shows a significant p-value when we change the order of the treatment mean and the no-treatment mean. The group sizes are somewhat unbalanced, which makes the tests for the period and treatment effects depend on order, so the ANOVA results are slightly misleading in suggesting that the treatment is significant.
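For anyone who wants to reproduce this kind of comparison, here is one possible sketch, not necessarily the exact code behind the numbers above. It assumes the ISwR package is installed and that grp is coded as 1 = placebo first, 2 = active first, which is how I read the ashina help page. The names long and fit are my own.

```r
library(ISwR)  # provides the ashina data (vas.active, vas.plac, grp)

n <- nrow(ashina)

# Long format: one row per subject and treatment
long <- data.frame(
  id    = factor(rep(seq_len(n), 2)),
  grp   = rep(ashina$grp, 2),
  treat = factor(rep(c("active", "plac"), each = n)),
  vas   = c(ashina$vas.active, ashina$vas.plac)
)

# Assumption: grp 1 = placebo first, grp 2 = active first.
# A treatment falls in period 1 when it is the one given first.
placebo_first <- long$grp == 1
is_placebo    <- long$treat == "plac"
long$period   <- factor(ifelse(placebo_first == is_placebo, 1, 2))

# Additive model: subject + period + treatment
fit <- lm(vas ~ id + period + treat, data = long)

anova(fit)              # sequential tests, so term order matters
drop1(fit, test = "F")  # each term tested as if entered last

# Paired t test on the same data, ignoring the period effect
t.test(ashina$vas.active, ashina$vas.plac, paired = TRUE)
```

Comparing anova() with drop1() is a quick way to see how much the order of the terms changes the F tests when the groups are unbalanced.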

10.3. Consider the following definitions

a <- gl(2, 2, 8)

b <- gl(2, 4, 8)

x <- 1:8

y <- c(1:4, 8:5)

z <- rnorm(8)

Note:

rnorm() is a built-in R function that generates a vector of normally distributed random numbers. It takes the sample size as its first argument and returns that many random draws, by default from a standard normal distribution (mean 0, standard deviation 1).
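A quick sketch of how rnorm() behaves. The set.seed() call and the second draw with a different mean and sd are my own additions for illustration; the exercise itself only uses rnorm(8).

```r
# Fix the seed so the random draws are reproducible (my addition)
set.seed(123)

# Eight draws from the standard normal distribution
z <- rnorm(8)
length(z)

# mean and sd can be changed through the optional arguments
z_shifted <- rnorm(8, mean = 10, sd = 2)
```

Without set.seed(), z changes on every run, so the fitted coefficients in the models below would change too.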

1. Generate the model matrices for models z ~ a*b, z ~ a:b, etc. In your blog posting discuss the implications. Carry out the model fits and notice which models contain singularities.
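Here is a minimal sketch for the two models named in the exercise. It compares the number of columns in each model matrix with its rank, since a model is singular when the matrix has more columns than its rank. The set.seed() call and the object names are my own additions.

```r
# Definitions from the exercise (seed added for reproducibility)
set.seed(1)
a <- gl(2, 2, 8)
b <- gl(2, 4, 8)
x <- 1:8
y <- c(1:4, 8:5)
z <- rnorm(8)

# Model matrices for the two models named above
mm_full  <- model.matrix(~ a * b)  # main effects plus interaction
mm_inter <- model.matrix(~ a:b)    # interaction term only

# Columns versus rank: more columns than rank means a singularity
c(columns = ncol(mm_full),  rank = qr(mm_full)$rank)
c(columns = ncol(mm_inter), rank = qr(mm_inter)$rank)

# Fit both models; singular fits show up as NA coefficients
fit_full  <- lm(z ~ a * b)
fit_inter <- lm(z ~ a:b)
coef(fit_full)
coef(fit_inter)
```

With these definitions, z ~ a:b has an intercept plus one indicator column for each of the four a-by-b cells, so one coefficient cannot be estimated and lm() reports it as NA. The same column-versus-rank check can be applied to any other formula from the exercise.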
